I've been doing something for the past few months. And I caught it last week as I was using Claude (which knows me as El Capitan, because I'm not volunteering all my info to AI companies) to help refine and reposition the Bullseye Customer Sprint landing page at howthisworks.co. Within a longer thread, I had typed: "Given that my bullseye customer for this service is a funded founder or bootstrapped founder, how might we frame the benefits section more directly?"

Yep, that's right: I was using ‘design thinking’ language in my AI process. But it wasn't for the AI's benefit. It was for me.

What I noticed

I've been using AI tools daily for months now, and I started noticing a pattern: some prompts actually helped me think, while others just made me a passive receiver of whatever came back.

When I prompt with something like "how should I..." or "what's the best way to…," I'm more likely to take whatever comes back and just run with it. After all, the AI spit out an answer, so I'm following the instructions. Bee-boop.

But when I prompt with "how might we..." or "given X context, what are different ways to…," something else happens. I'm requesting options to evaluate, looking for multiple approaches that might work — things I need to think about and possibly adapt to this specific situation.

The AI isn't necessarily generating output of different quality. I'm receiving it differently. (And sometimes I'm very explicit about it: I ask for three (3) versions exploring different angles for a particular audience.)

Lazy thinking

Here's what I've learned from almost 15 years of working in product and design: there are unlimited wrong answers to any problem, but also some undiscovered number of right ones. Let's say a thousand, for the sake of argument. And most people and teams stop at the first right one. They find something that works, something that makes sense and checks the boxes, and they move on.

But that first answer that could work? It's rarely the best fit. Nor is it the strongest option. It's just... fine.

This is exactly what happens when you prompt AI for "the best way" to do something. You get back a reasonable, middling answer from its machine-learned catacombs. It’ll probably work. You implement it. Done. You move on.

It's also what most people do when they're searching for something online. Got it, found it. Except you just picked one of thousands of potentially good solutions without exploring which one actually fits your specific context best. Not even a quick evaluation.

Most people stop at the first right path. But what if you explored more than one direction before deciding? Or, even better, kept going to find more paths?

In practice

While working on the Bullseye Customer Sprint positioning from the opening, I could've prompted: "Generate a benefits section for this customer research sprint as a service aimed at founders." That would've given me something reasonable. Something that could've worked. First right answer: maybe refine it a bit, and done.

Instead I prompted with specific context and framing: "Given that my bullseye customer for the current version of the bullseye customer sprint is a funded founder or bootstrapped founder, how might we frame the benefits section to speak directly to their specific contextual pressures around runway and product-market fit?"

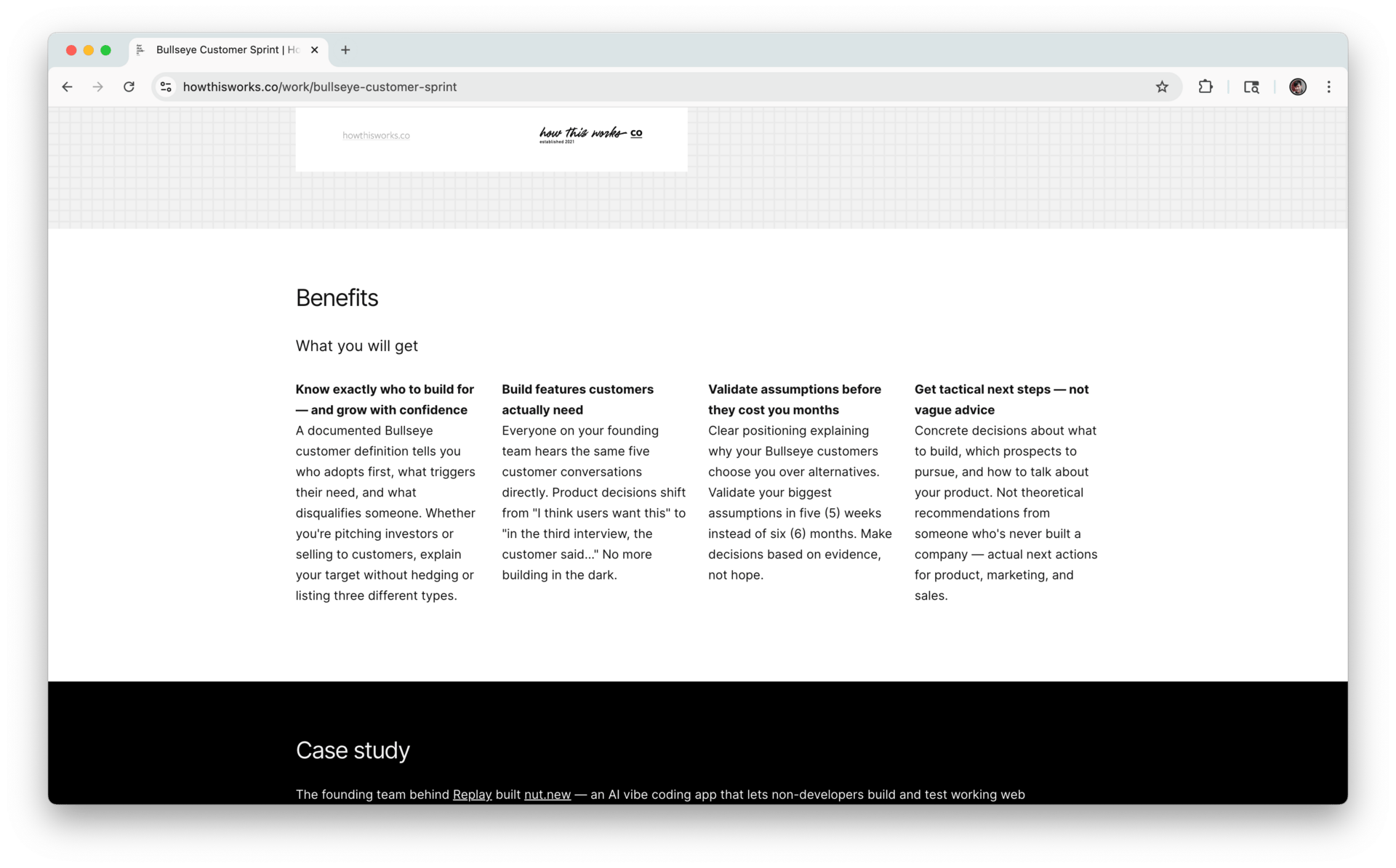

I also dropped in a few conversation details from notes with previous and current clients. What came back was a set of strategic directions I could evaluate:

Lead with clarity over ambiguity — know exactly who adopts first vs. hedging with "well, it could be for several types of users"

Frame around evidence-based decisions — shift from "I think users want this" to actual things customers say

Position against guessing and hope — validate assumptions in weeks instead of months, run the numbers here

Emphasize tactical outcomes over theory — concrete next steps, not vague advice from people who've never built companies

Connect to what founders actually worry about — investor pitches, team alignment, not stumbling around in the dark

Five (5) directions. All of them could work. But none were the "best." I needed to consider which angle actually resonated with the founders I've been working with and talking to. So, I ended up weaving elements from all the options into four (4) benefits currently on the page, plus adding details the AI hadn't suggested but that the initial outputs made me think of.

The final benefits section — combining elements from five (5) strategic directions that Claude suggested, plus angles that came from thinking through the options

Therein lies the difference. I was coordinating and staying curious.

(As I often do, I also had my writing project in Claude critique the page against my howthisworks.co homepage and design sprint page. "Critique this" is one of my most-used prompts, whether I'm aiming it at something the AI just generated or at something I've written or said.)

Ready to apply facilitation principles to how you're framing problems and exploring solutions? Let's talk about how this shows up in your product work.

The thing I don't hear much about

Everyone's (still) obsessing over prompt engineering: the perfect phrasing to get the AI to generate better output. "This works better on Sonnet 4.5." "That works better on Gemini." But I don't think that's the biggest tension. Better output doesn't guarantee better outcomes. What matters more is how you treat what comes back: as instructions to follow or as options to evaluate. Whether you stay engaged with your own judgment or outsource your thinking.

I've seen people generate ace AI output and then implement it poorly because they never questioned whether it actually fit their context. They found a right answer and stopped thinking.

I've also seen people take mediocre AI output and use it well because they stayed in conversation with it — pushing back, adapting, and combining it with their own experience.

The quality of your thinking matters more than the quality of the prompt.

Something else to consider

"I'm not here to be right. I'm here to get it right." (Brené Brown)

Brown's distinction captures exactly what shifts when you change how you prompt AI tools. When you ask "what's the best way," you're asking the AI to be right. And when you ask "how might we" or "what are different approaches," you're trying to get it right for your specific context.

The first approach stops at the answer. The second approach starts a conversation about what actually works.

Try this

Next time you're about to prompt an AI tool with "What's the best way to..." or "How should I...," pause for a moment. Try rephrasing it as exploration: "What might work here?" or "What are different approaches to..." or "Given [your specific context], how might we..."

Even better? Talk it through with another human being first. Someone who knows your context, your constraints, your customers. AI can generate options, but another person can push back, ask clarifying questions, and help you think — not just respond.

See if it changes how you receive what comes back. See if it keeps you more engaged with your own judgment instead of deferring to the AI's authority.

See if you push past that first reasonable answer to find something that works better.

Because these tools are powerful. But they're most powerful when they enhance your thinking, not replace it. When they help you find better outcomes, not just faster outputs.

My question to you: How are you prompting AI tools? Are you asking for answers or exploring options? Hit reply and tell me how the framing changes what you do with what comes back. I'm curious. Also, do you have a library of prompts, or do you write them from memory each time?

If someone forwarded this to you and you want more of these thoughts on the regular, subscribe here.

Until next time,

Skipper Chong Warson

Making product strategy and design work more human — and impactful